Orby AI Automation

Delivered · Web App · 2023

Boost productivity in document processing workflows with efficient and responsible AI automation.

CLIENT

ORBY AI

project type

Web

AI tool

Team

1 Project manager

3 UX Designers ←

3 Engineers

Contribution

User journey mapping

High-fidelity design

Design system development

Timeline

8 weeks

Jan-Feb 2023

project context

Orby AI is a web-based AI automation tool that improves document handling productivity for enterprise clients. In this version, our client focused on the contract handling use case for financial teams. As a member of the design team, my primary responsibility was to translate the AI's capabilities into a tangible, improved user experience.

AI automation creates a new landscape for enterprise document workflow

AI automation can strengthen document workflows by reducing maintenance costs compared to traditional methods, handling a wider range of tasks than rule-based processes, and processing documents more efficiently.

Input

Learn from user actions

Automated document processing

Improve from user validation

Automated Processing

Output

End User:

Financial Analysts

“I support the finance team to review documents and file reports efficiently and accurately.”

Learn users' patterns

Automate documents faster

From AI's technical feasibility to a user-friendly product, there remain unresolved challenges:

How can we establish trust in AI suggestions from the outset?

AI suggestions aren't always perfect. With more user input and feedback, accuracy improves over time. However, early failures might lead to user churn.

How can we design an intuitive workflow to speed up processing?

After the AI completes its tasks, users have to spend time verifying its accuracy. A quick and efficient experience is essential for the success of the product.

Final Outcomes

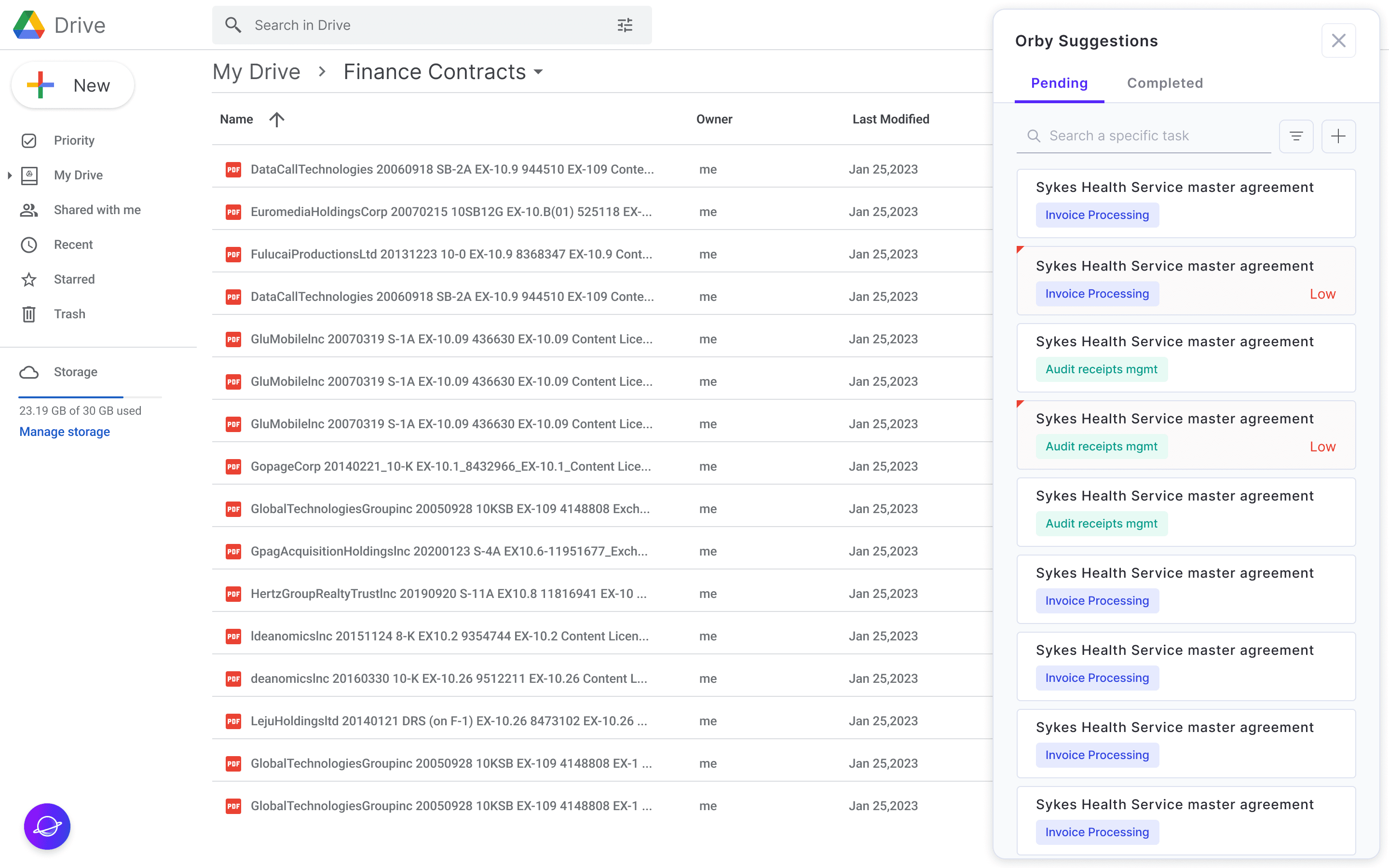

Provide visibility into the process and make the training process take less effort to complete

Guide users through the training process

Lack of intuitive flow to help expedite the processing

Automated document processing without the need for scripting

A clear navigation pattern for validating results and identifying problems

Validate with better accuracy and efficiency

research

Starting with limited knowledge of the use case and of AI automation, we navigated the problem space through research:

Keep increasing domain knowledge

To understand how to connect business goals and technical feasibility with design, we researched AI automation's strengths and limitations.

7 competitive analyses

We analyzed relevant products, including RPA, contract processing, and document assistants, to learn from existing patterns and user behaviors and to identify areas for optimization.

2 workshops for aligning expectations

We gathered stakeholders' perspectives on product expectations and narrowed the design scope to the key problems.

Key insights

AI cannot achieve perfect accuracy, and it's essential to ensure its responsible use by users.

A clear navigation pattern helps users prioritize key operations and improves efficiency.

Stakeholders aim to ensure that new users can easily grasp and maximize the product's capabilities.

design process

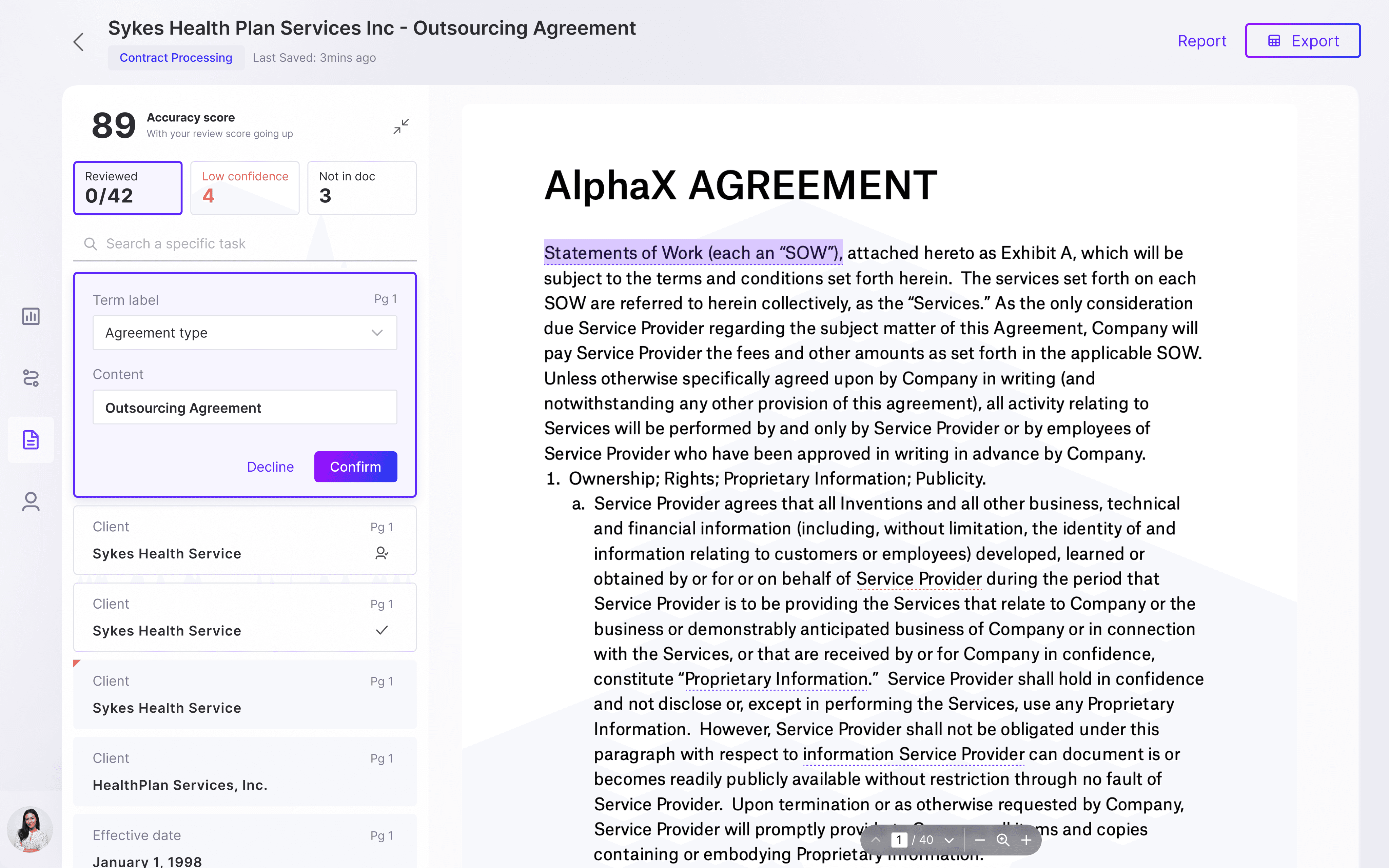

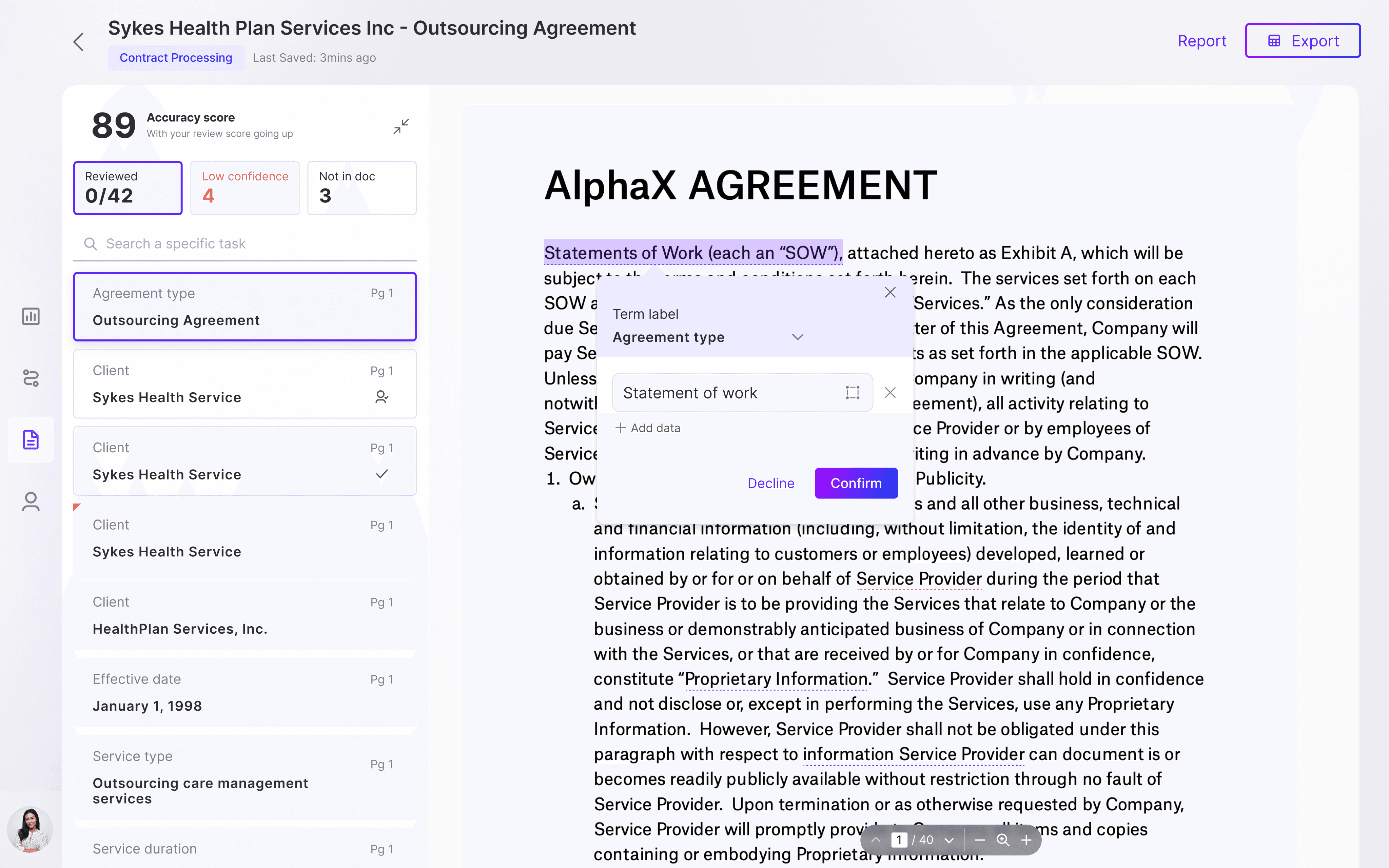

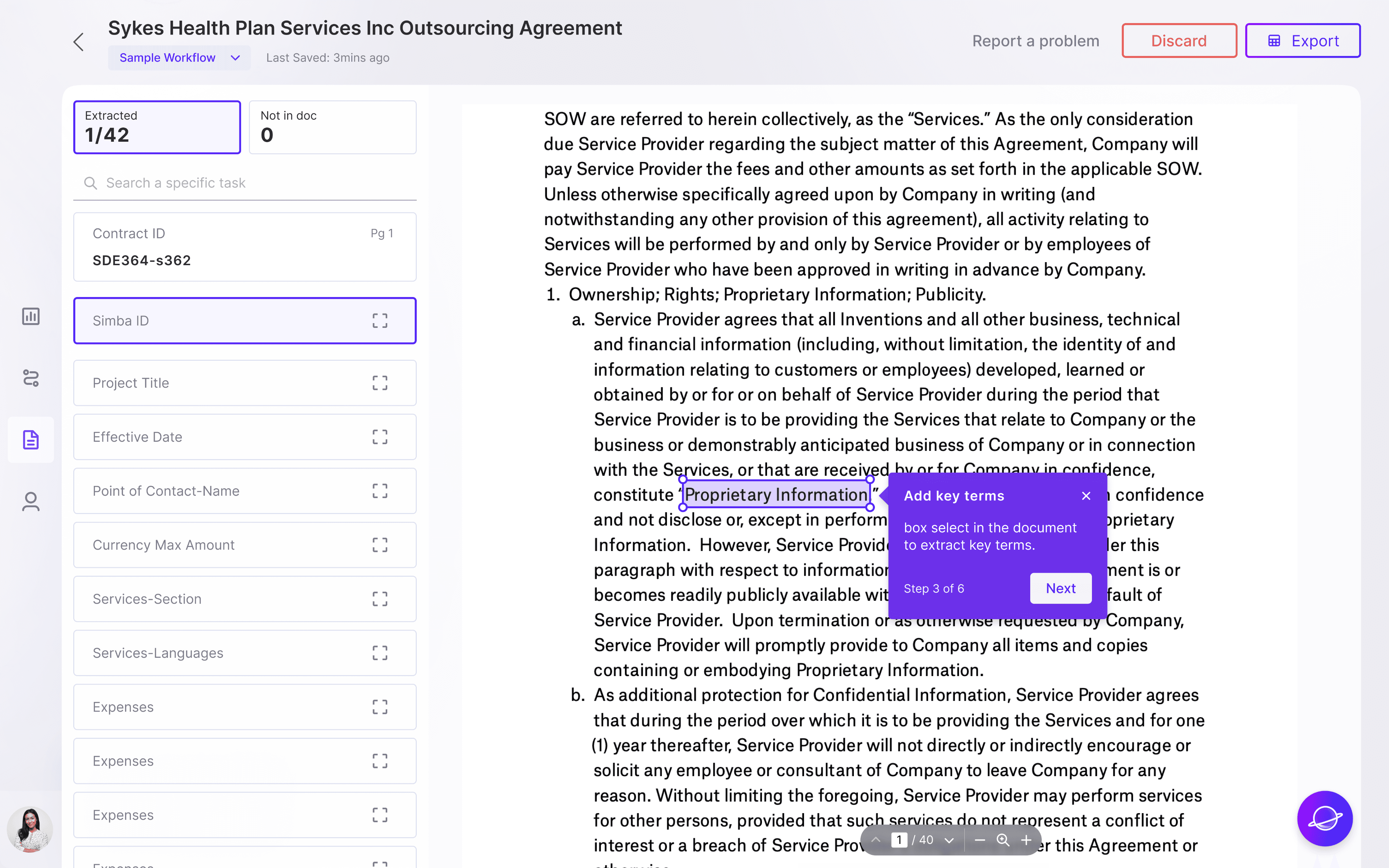

Make the validation process streamlined

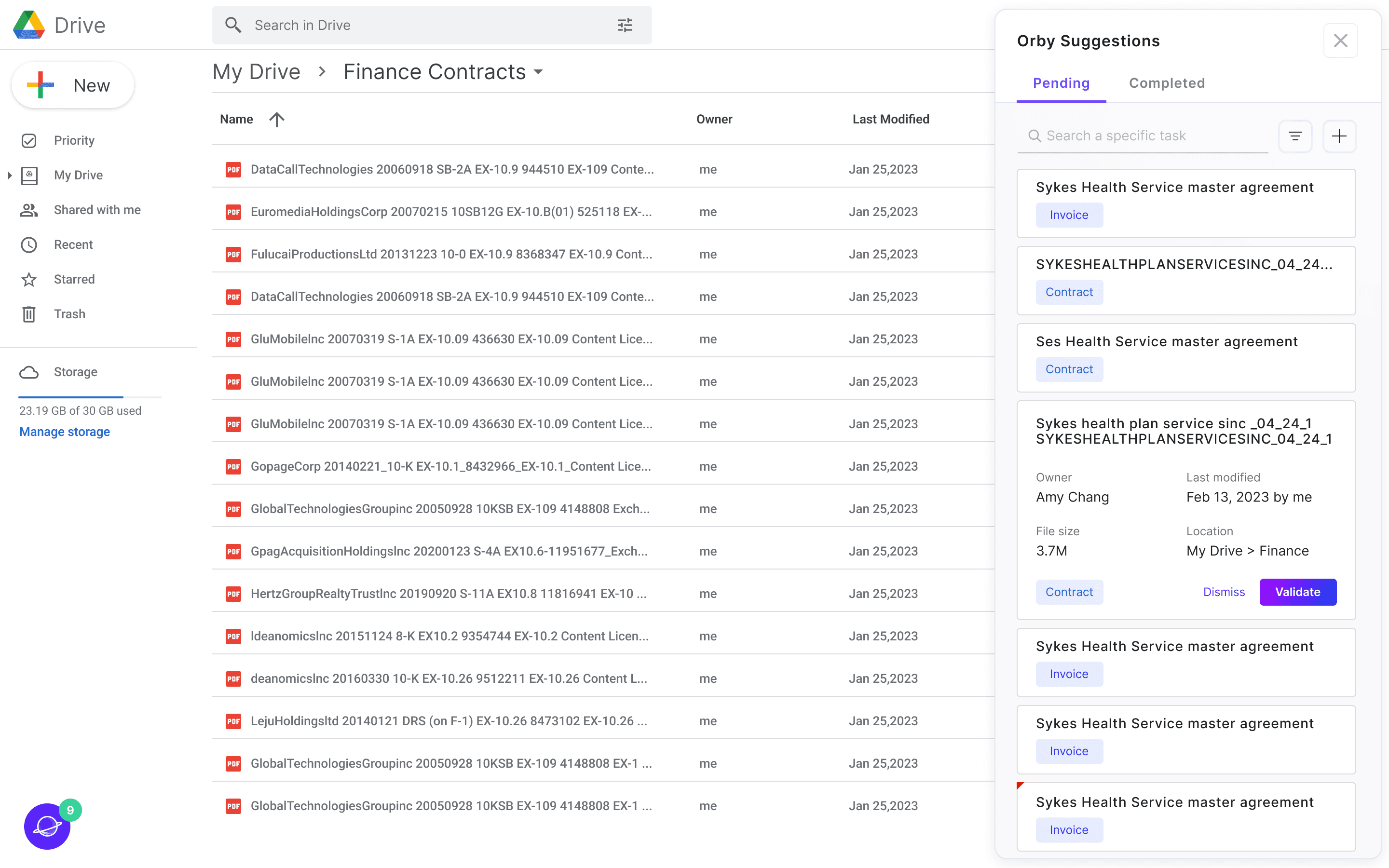

Based on the competitive analysis, we discovered that most document processing tools share a common layout that users are comfortable with. While there is no need to invent a new pattern, a few changes to the navigation could significantly enhance the speed of finding and editing incorrect suggestions.

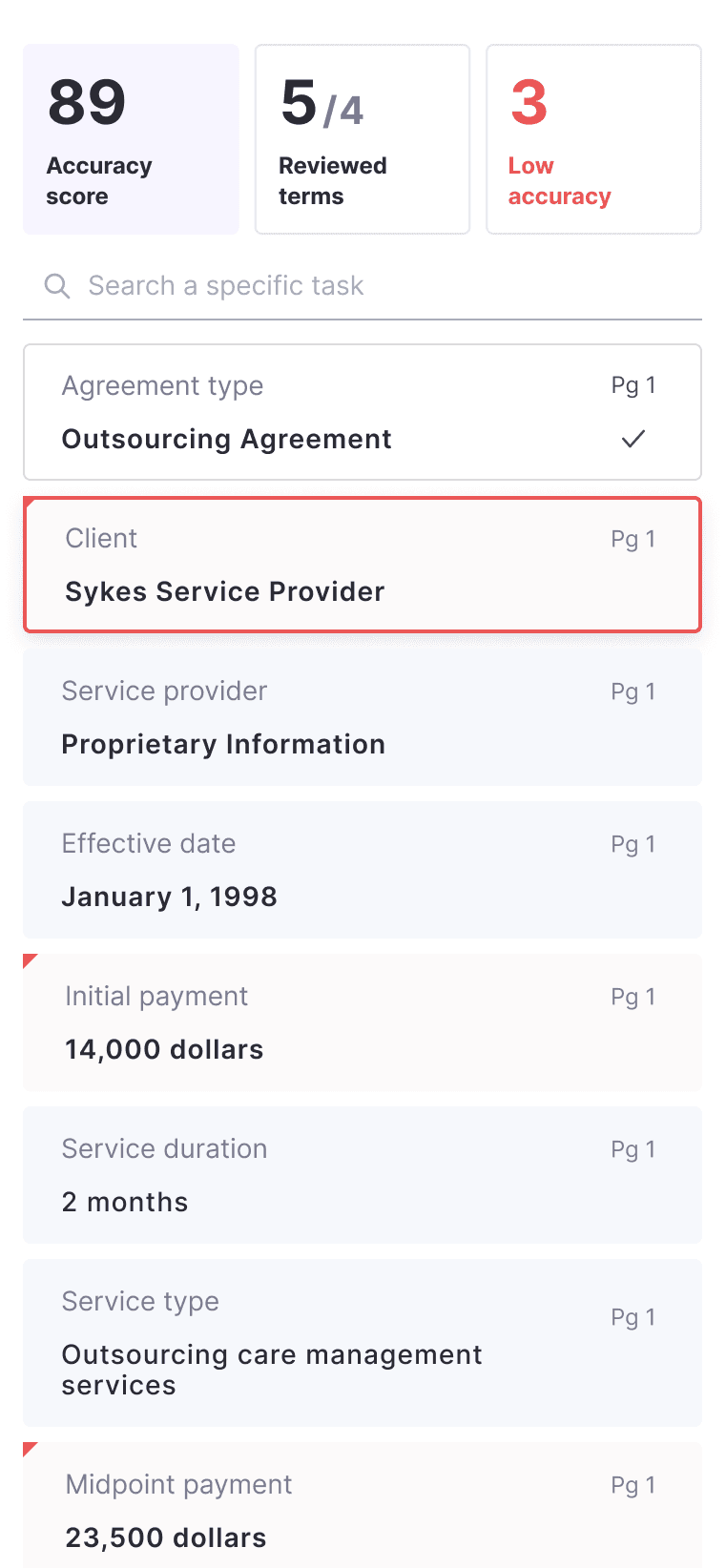

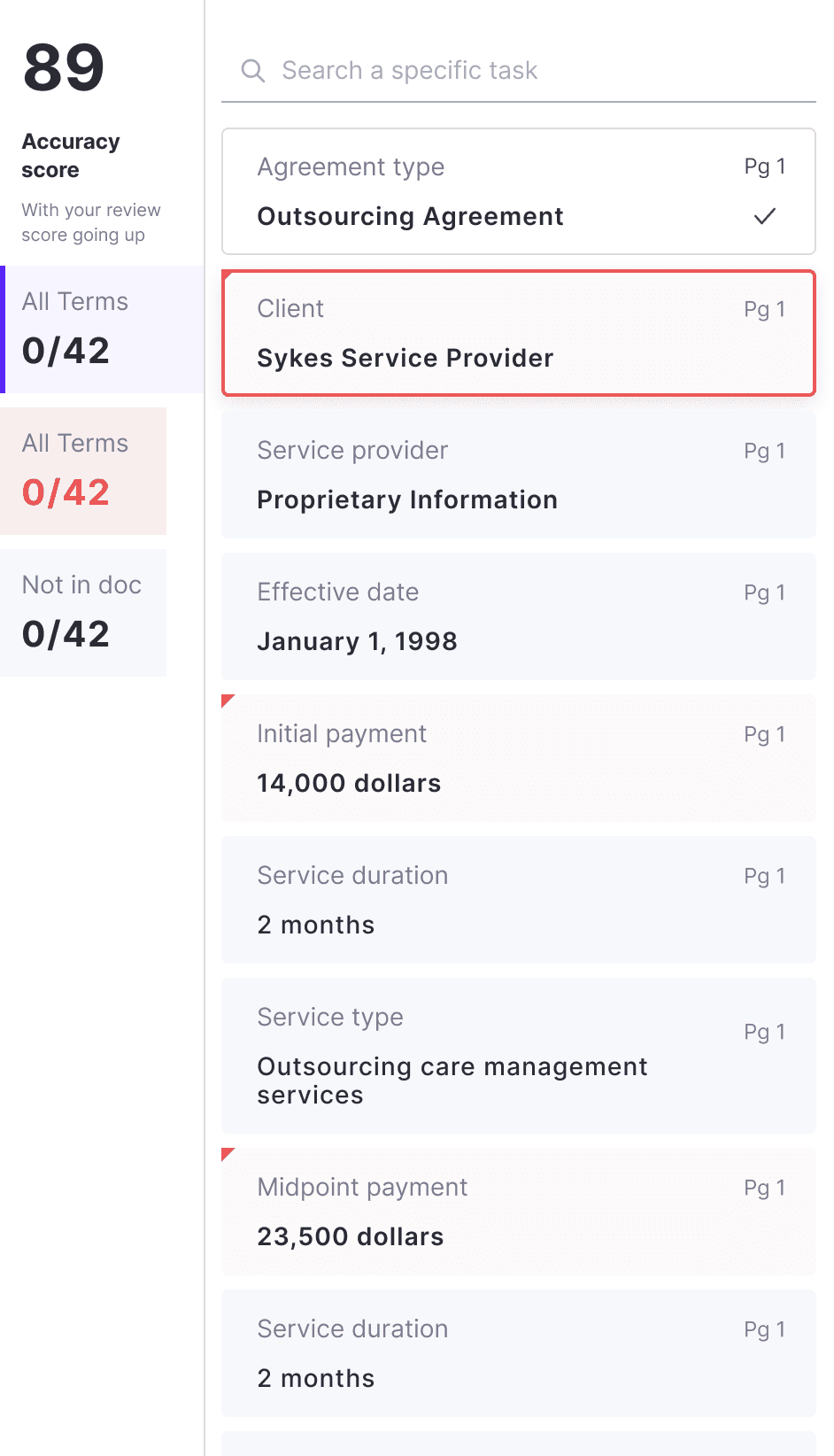

Improve key term list navigation

The primary goal of validation is to identify errors in AI-generated results. Leveraging the model's ability to detect problematic outcomes, we implemented filtering tabs so users can address issues first and then complete the rest efficiently.

From Internal Testing

Showing the confidence score by risk level and showing a score for each term did not help users identify problems

v1

v2

Final

Validate a little bit faster

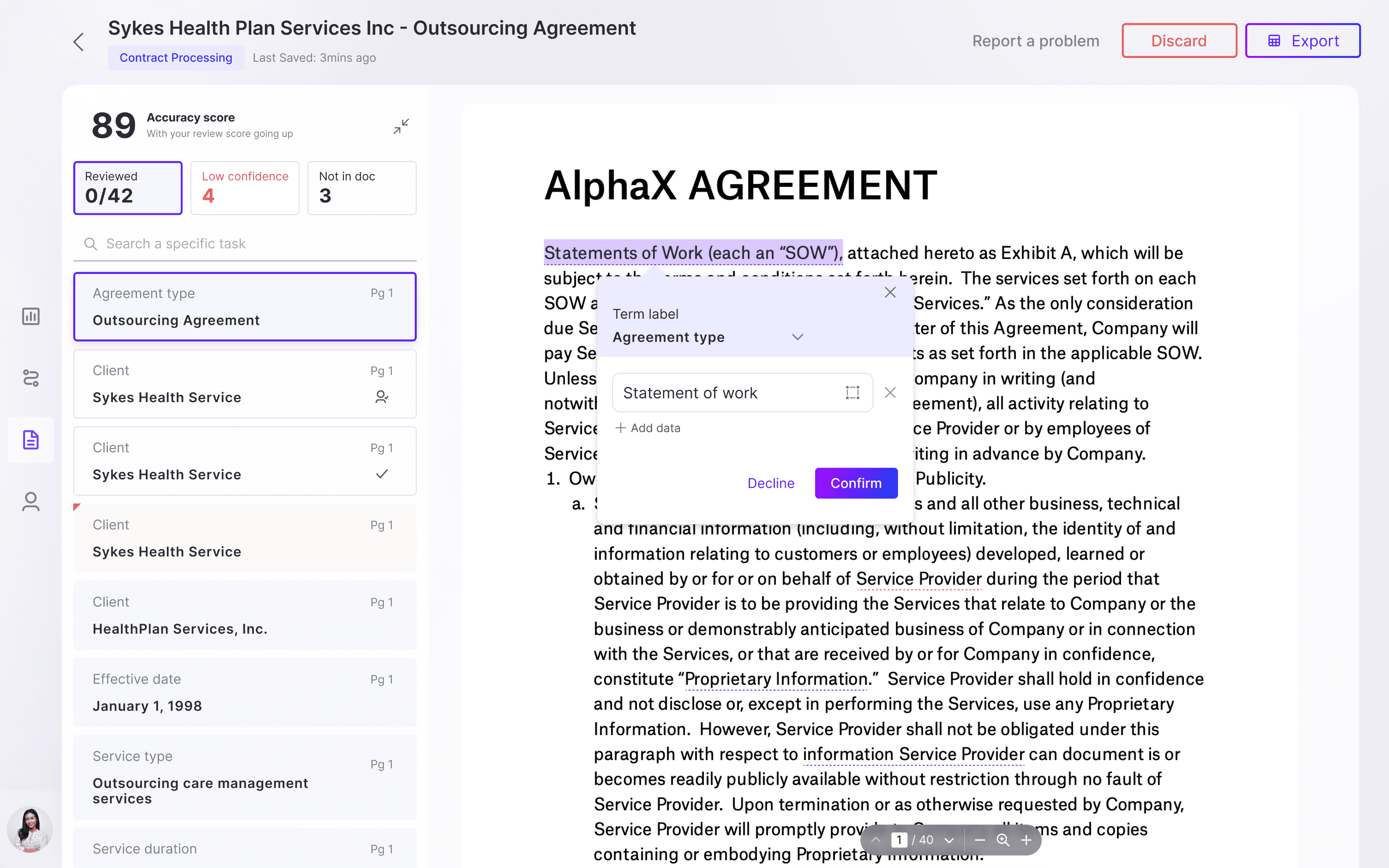

In many document processing products, actions are integrated within a key term list on the left panel, leading to a complex visual queue between error detection and editing. To simplify this process, we made actions more contextual and quicker through a floating modal that appears alongside the content.

Research Finding

A complex visual queue between document and key term list makes it challenging to navigate

Before

Users need to constantly compare the list content with the document

After

A floating modal lets users focus on the document side

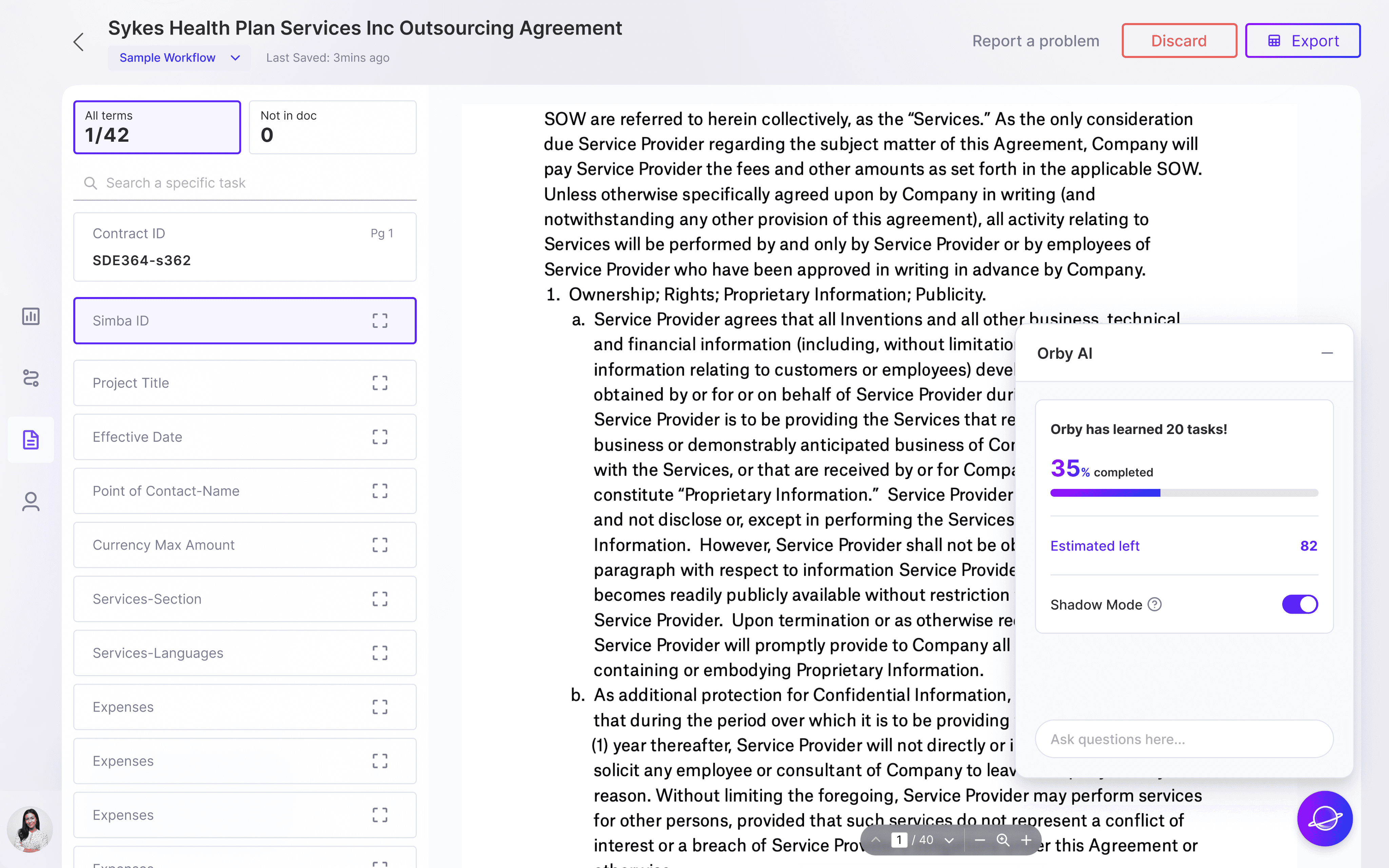

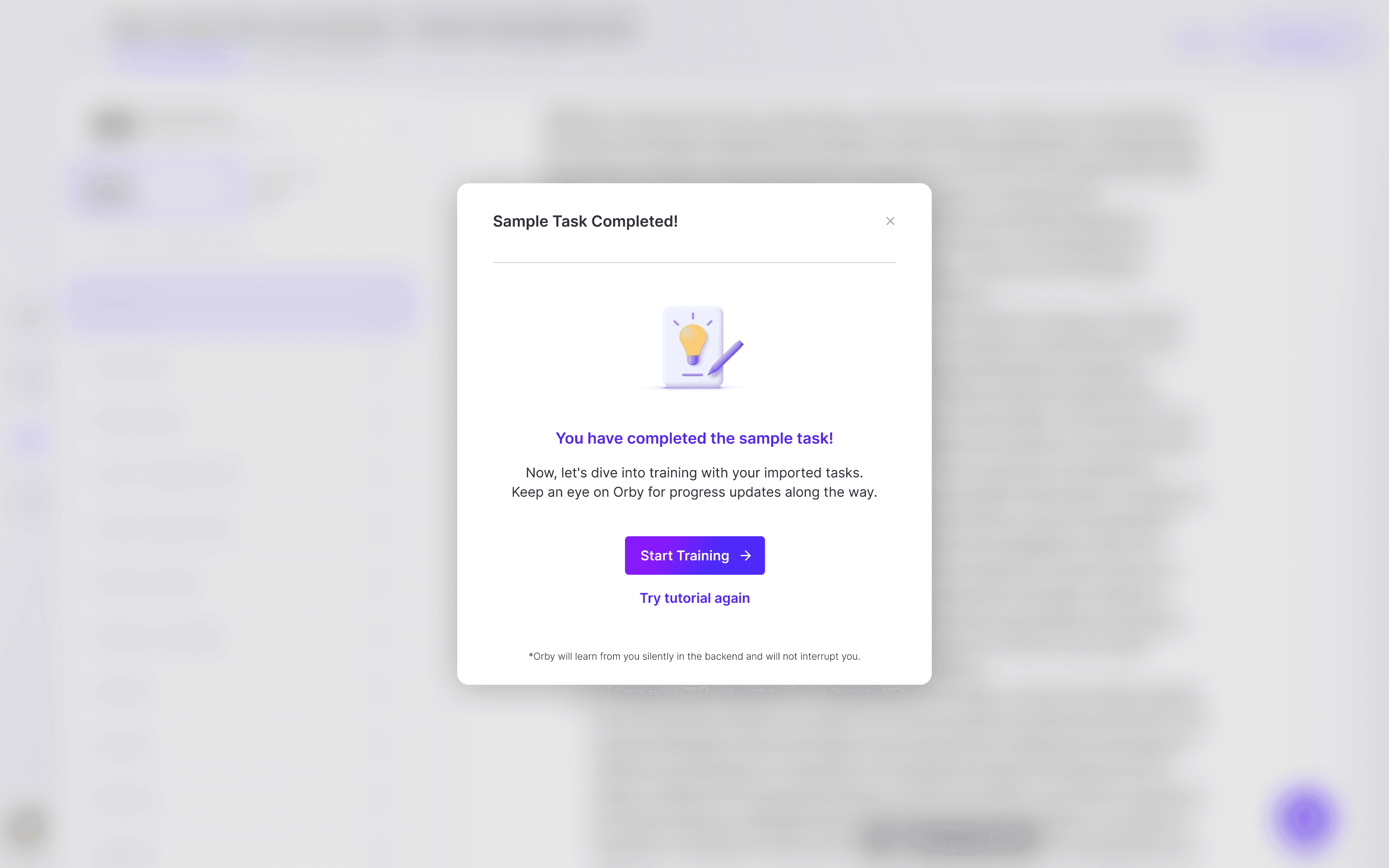

Make it effortless to start with

For users unfamiliar with AI, understanding model training can be challenging, yet it's essential for improving suggestions.

Before Orby can achieve improved automation accuracy, the model requires further training from users, which involves completing 100-200 manual extraction tasks. Training an AI is also a new concept for many users. Guiding users through this process smoothly while maintaining their patience is crucial.

Strategies for navigable training

Show users their progress and give feedback throughout the training

Break down the training into interactive steps with a sample task

Access to Orby's support through a dialogue feature

Guide users to start training

Set expectations for training

Interactive step-by-step guide

Provide visibility into the progress

Make it responsible to build trust

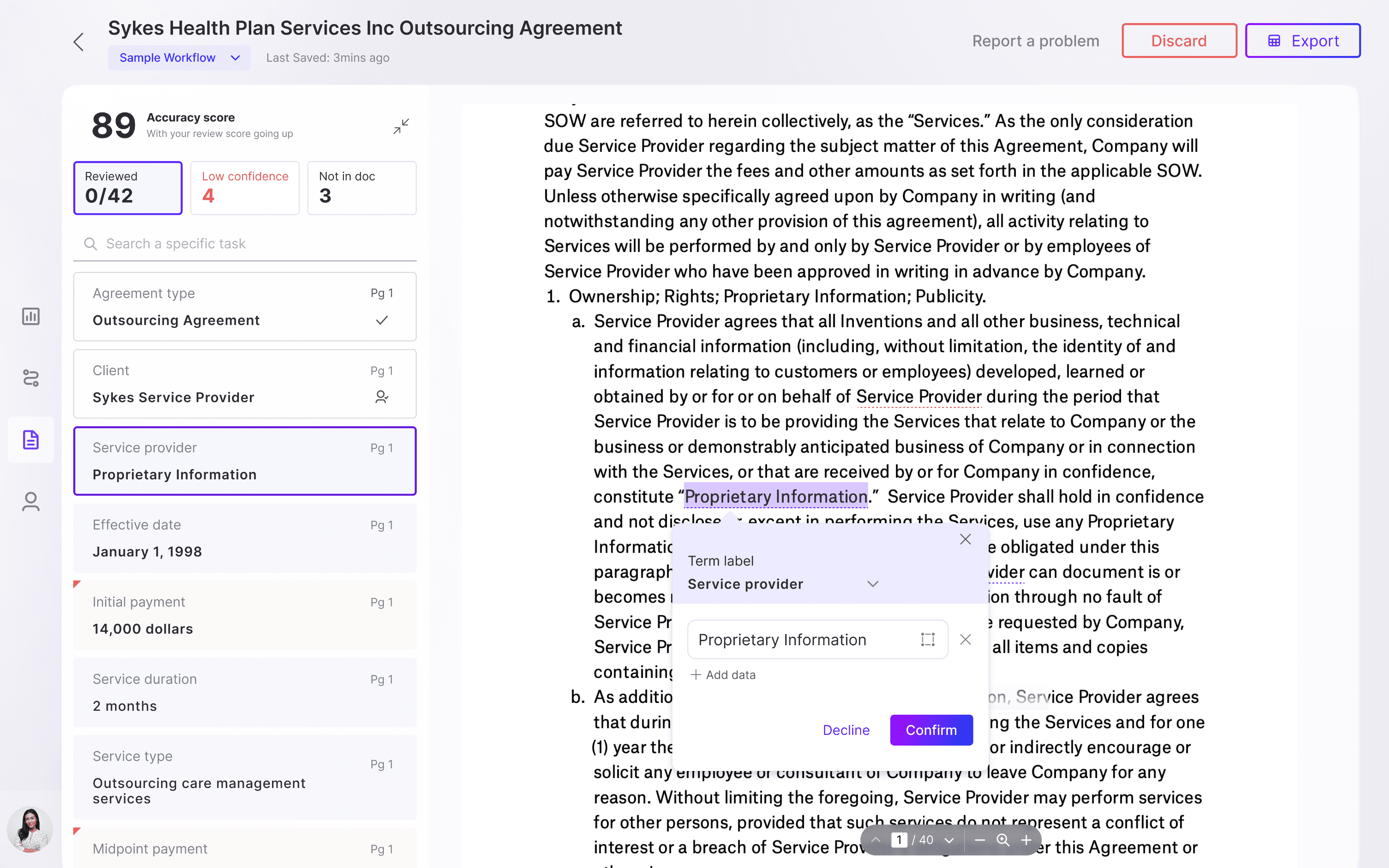

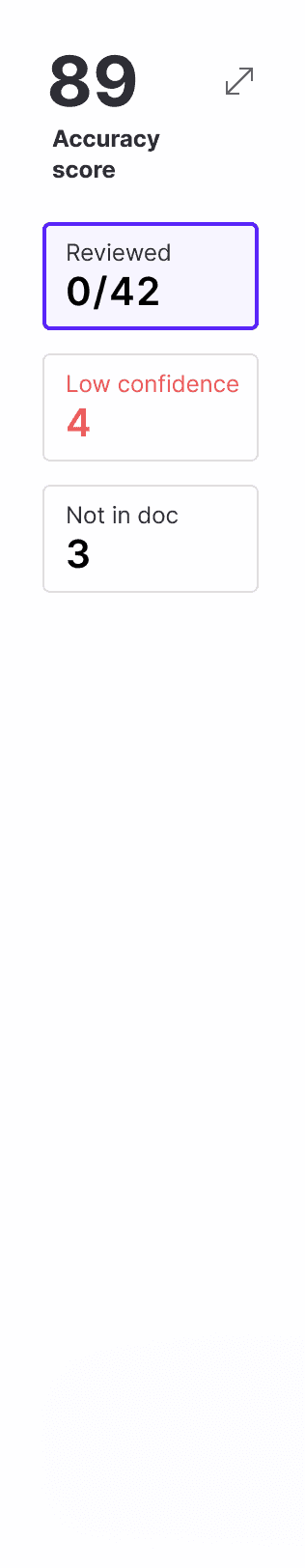

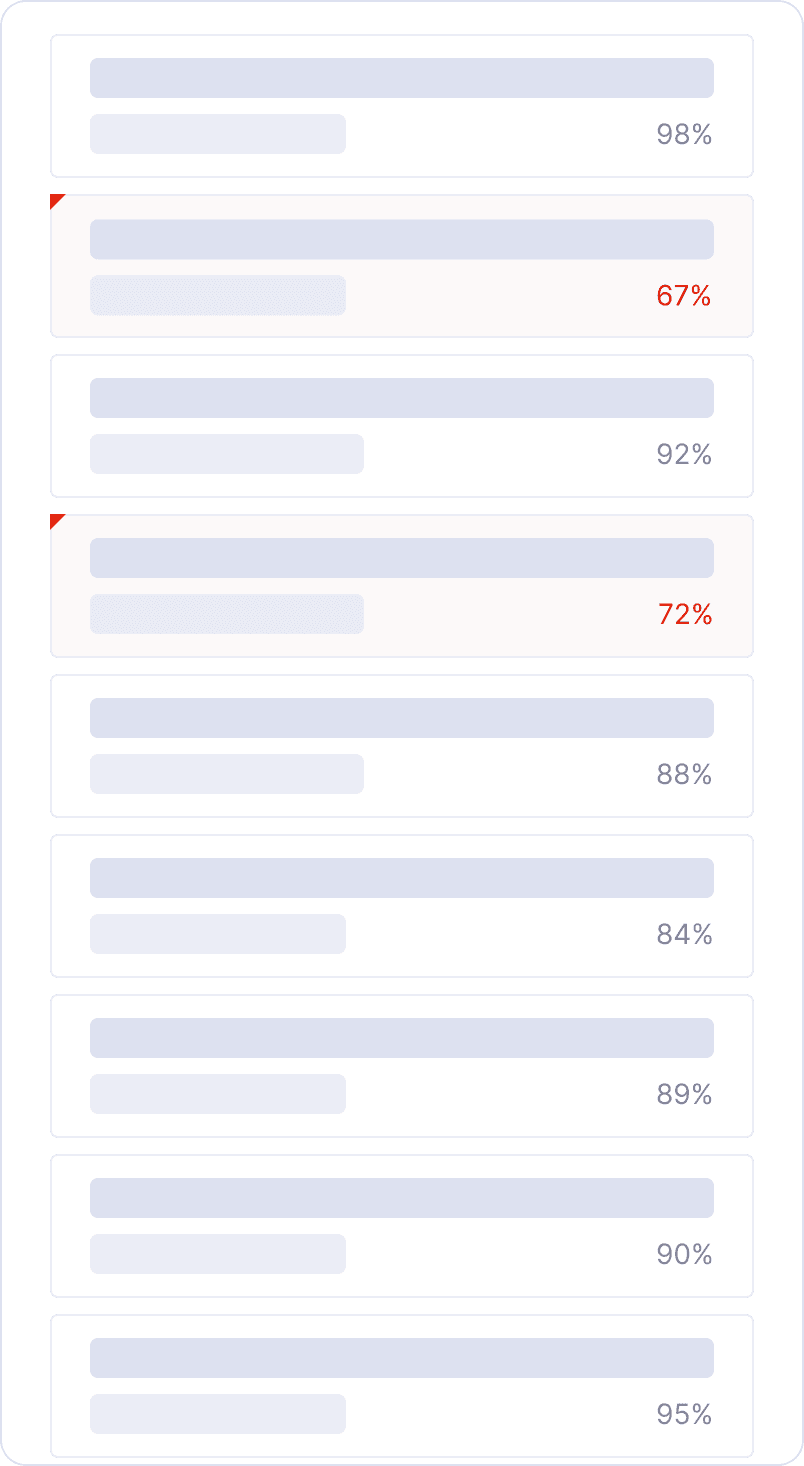

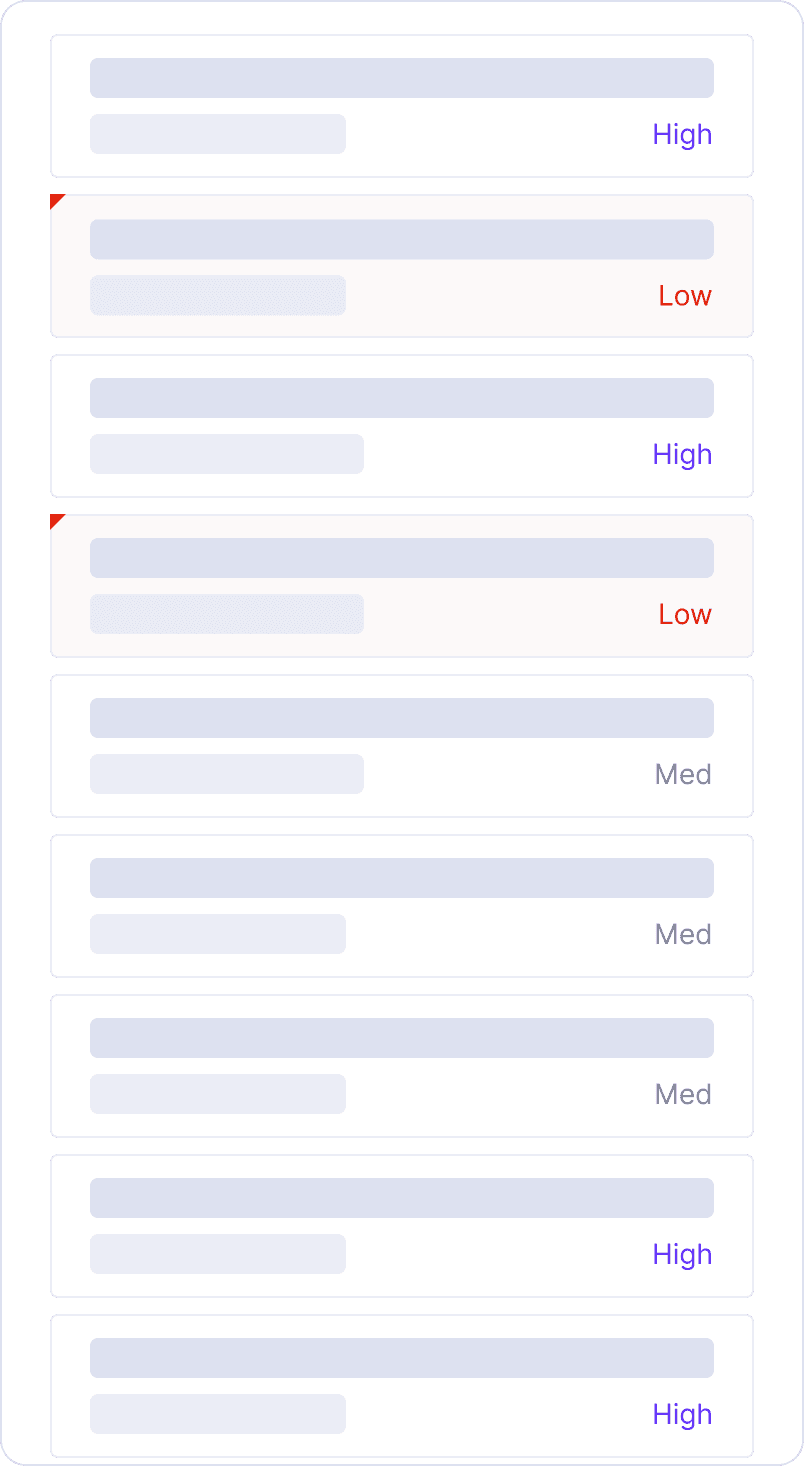

How can we make the confidence score easy to understand?

Orby can provide a confidence score for each suggested task and term, indicating how confident the model is in that suggestion's accuracy. Our design goal is to help users prioritize issues, so after iterating through various solutions, we opted to highlight only the tasks and terms with low confidence scores.
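As a rough illustration of this decision, the sketch below flags only low-confidence terms and surfaces them first for validation. It is not Orby's implementation: the `TermSuggestion` shape, the `needs_review` and `order_for_validation` helpers, and the 0.6 cutoff are all assumptions made up for the example.

```python
from dataclasses import dataclass

# Hypothetical cutoff; the case study does not state the value Orby uses.
LOW_CONFIDENCE_THRESHOLD = 0.6


@dataclass
class TermSuggestion:
    name: str          # key term label, e.g. "Amount"
    value: str         # text the model extracted
    confidence: float  # model confidence, assumed to be in [0, 1]


def needs_review(term: TermSuggestion,
                 threshold: float = LOW_CONFIDENCE_THRESHOLD) -> bool:
    """Flag a term only when its confidence falls below the cutoff."""
    return term.confidence < threshold


def order_for_validation(terms: list[TermSuggestion],
                         threshold: float = LOW_CONFIDENCE_THRESHOLD) -> list[TermSuggestion]:
    """Put flagged terms first, keeping the original order within each group."""
    flagged = [t for t in terms if needs_review(t, threshold)]
    rest = [t for t in terms if not needs_review(t, threshold)]
    return flagged + rest


if __name__ == "__main__":
    terms = [
        TermSuggestion("Party", "Acme Corp", 0.82),
        TermSuggestion("Amount", "$1,000", 0.41),
        TermSuggestion("Date", "2023-01-15", 0.89),
    ]
    # 0.82 and 0.89 are treated the same; only 0.41 is highlighted.
    print([t.name for t in order_for_validation(terms)])
    # prints ['Amount', 'Party', 'Date']
```

Collapsing the score into a single "needs review" flag means users never have to interpret the gap between two high scores; they only see which items to check first.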

Final

v1

v2

What is the difference between 82 and 89?

Showing all levels is unnecessary

How can we balance user edits and automation to calibrate trust?

Orby's predictions aren't always 100% accurate. To rectify any incorrect or missing predictions, we've introduced a floating modal for flexible edits, ensuring precise results through collaboration between the product and the user.

Flexible fix for wrong suggestions

Add missing contents

show what might be missing

show what might be inaccurate

Let users take back control when needed

If the suggested term or the entire task is totally wrong or unwanted, users can easily locate the "Decline a term" or "Discard a task" action for quick resolution.

if the term is totally wrong

if the suggestion has too many errors

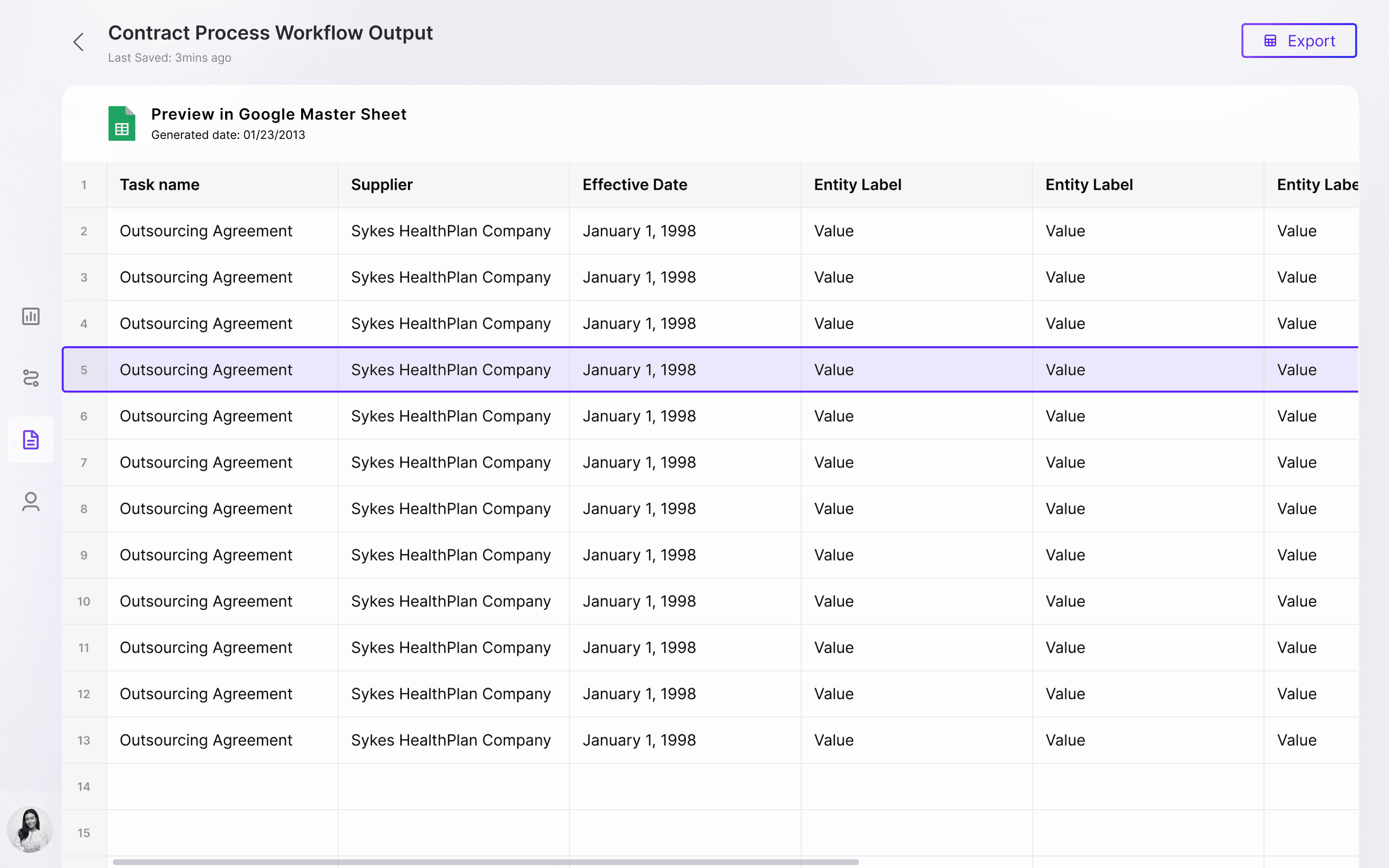

Give users confirmation before proceeding

Upon completion of the current workflow, we introduce an additional step for users to review the final output (the spreadsheet) and confirm that the task is complete. This small step helps reinforce user trust in the AI co-working mode.

next workflow

outcome and reflection

85%

The final design led to an 85% improvement in productivity, with processing time dropping from 60 min to 5 min per task.

NPS score

The final design outcome helped the client achieve an improved NPS score among its target users.

Clearly outline the capabilities and limitations of the product to set realistic user expectations and avoid unintended deception.

Discuss with cross-functional (XFN) stakeholders all the tradeoffs of collecting or not collecting different types of feedback.